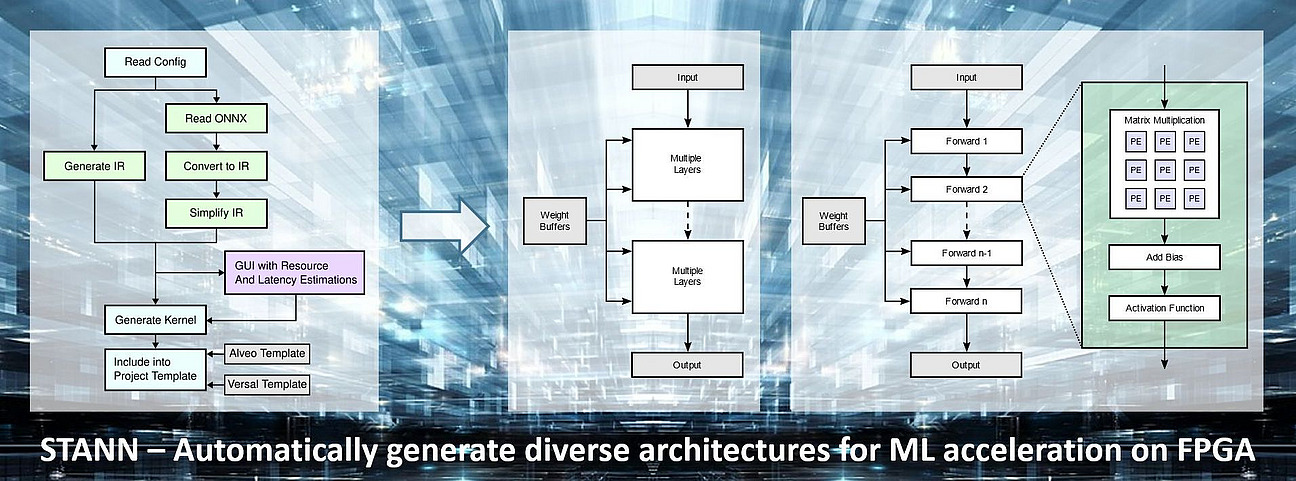

STANN – High-Level Design Flow for FPGA-based ML-Accelerators

While deep learning accelerators have been a research area of high interest, the focus has usually been on monolithic accelerators for the inference of large CNNs. Only recently have accelerators for neural network training started to gain more attention. STANN (Synthesis Templates for Artificial Neural Networks) is a template library developed in the Computer Engineering Group that enables quick and efficient FPGA-based implementations of neural networks via high-level synthesis. It supports both inference and training, which makes it applicable to domains such as deep reinforcement learning. Its templates are highly configurable and can be composed in different ways to create different hardware architectures. In this way, the best trade-off between speed, resource requirements, energy efficiency, and accuracy can be achieved for each individual application.
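To make the idea of composable, configurable templates concrete, the following minimal sketch chains two fully connected layers and a ReLU into a small network. It is not STANN code: the names `dense_forward`, `relu_forward`, and `mlp_forward`, the row-major weight layout, and the meaning of the `PE` parameter are assumptions for illustration. In this software model `PE` only changes how the output loop is split; in an HLS flow such a parameter would typically control how many output neurons are computed in parallel, so different `PE` choices correspond to different hardware architectures computing the same function.

```cpp
#include <array>
#include <cstddef>

// Hypothetical fully connected layer: IN/OUT set the layer structure, PE the
// number of output neurons handled per outer iteration (under HLS, the inner
// loop would be unrolled into PE parallel processing elements).
template <std::size_t IN, std::size_t OUT, std::size_t PE, typename T>
void dense_forward(const std::array<T, IN>& x,
                   const std::array<T, OUT * IN>& w,  // row-major: w[o * IN + i]
                   const std::array<T, OUT>& b,
                   std::array<T, OUT>& y) {
    static_assert(PE > 0 && OUT % PE == 0, "PE must divide OUT");
    for (std::size_t base = 0; base < OUT; base += PE) {
        for (std::size_t p = 0; p < PE; ++p) {
            const std::size_t o = base + p;
            T acc = b[o];
            for (std::size_t i = 0; i < IN; ++i) acc += w[o * IN + i] * x[i];
            y[o] = acc;
        }
    }
}

// Element-wise ReLU activation, usable for any arithmetic type T.
template <typename T, std::size_t N>
void relu_forward(std::array<T, N>& v) {
    for (auto& e : v) e = e > T(0) ? e : T(0);
}

// A 3-4-2 network composed from the templates above; PE1 and PE2 select the
// parallelism of each layer without changing what the network computes.
template <std::size_t PE1, std::size_t PE2>
std::array<float, 2> mlp_forward(const std::array<float, 3>& x,
                                 const std::array<float, 12>& w1,
                                 const std::array<float, 4>& b1,
                                 const std::array<float, 8>& w2,
                                 const std::array<float, 2>& b2) {
    std::array<float, 4> h{};
    dense_forward<3, 4, PE1>(x, w1, b1, h);
    relu_forward(h);
    std::array<float, 2> y{};
    dense_forward<4, 2, PE2>(h, w2, b2, y);
    return y;
}
```

Instantiating `mlp_forward<1, 1>` and `mlp_forward<4, 2>` yields identical outputs; only the (modeled) degree of parallelism differs, which is what allows the speed/resource trade-off to be tuned per application without changing the network's function.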

STANN is a library of C++ templates for high-level synthesis. Each supported neural network layer type is implemented as a namespace with sub-namespaces for each supported data type. These namespaces contain two functions: a forward function for inference and a backpropagation function that computes the gradients needed for training. Currently supported layers are fully connected, convolutional, pooling, and activation layers. The layer functions expose template parameters that configure the basic structure of the layer, e.g., the number of inputs and outputs, as well as template parameters that influence the hardware architecture, e.g., the number of processing elements. In addition to the layer templates, STANN includes various other template functions for neural network training and inference, for example different activation and loss functions, their respective derivatives, and optimizers such as stochastic gradient descent.
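The namespace organization described above might look roughly like the following sketch for a floating-point fully connected layer, together with a mean-squared-error derivative and a plain SGD step. All names (`dense::fp`, `forward`, `backward`, `mse_grad`, `sgd_update`) and signatures are hypothetical and chosen for illustration; the STANN repository defines the actual API. HLS pragmas are omitted so the code compiles as ordinary C++, and for brevity only `forward` carries the hardware parameter `PE`.

```cpp
#include <array>
#include <cstddef>

// Hypothetical layout: layer type -> data type -> {forward, backward}.
namespace dense {
namespace fp {

// Inference: y = W x + b. IN/OUT configure the layer structure; PE stands in
// for a hardware parameter (output neurons processed in parallel under HLS).
template <std::size_t IN, std::size_t OUT, std::size_t PE>
void forward(const std::array<float, IN>& x,
             const std::array<float, OUT * IN>& w,  // row-major: w[o * IN + i]
             const std::array<float, OUT>& b,
             std::array<float, OUT>& y) {
    static_assert(PE > 0 && OUT % PE == 0, "PE must divide OUT");
    for (std::size_t base = 0; base < OUT; base += PE)
        for (std::size_t p = 0; p < PE; ++p) {
            const std::size_t o = base + p;
            float acc = b[o];
            for (std::size_t i = 0; i < IN; ++i) acc += w[o * IN + i] * x[i];
            y[o] = acc;
        }
}

// Backpropagation: from dL/dy compute dL/dW = dy x^T, dL/db = dy and
// dL/dx = W^T dy (the latter is passed on to the previous layer).
template <std::size_t IN, std::size_t OUT>
void backward(const std::array<float, IN>& x,
              const std::array<float, OUT * IN>& w,
              const std::array<float, OUT>& dy,
              std::array<float, OUT * IN>& dw,
              std::array<float, OUT>& db,
              std::array<float, IN>& dx) {
    dx.fill(0.0f);
    for (std::size_t o = 0; o < OUT; ++o) {
        db[o] = dy[o];
        for (std::size_t i = 0; i < IN; ++i) {
            dw[o * IN + i] = dy[o] * x[i];
            dx[i] += w[o * IN + i] * dy[o];
        }
    }
}

}  // namespace fp
}  // namespace dense

// Derivative of the mean squared error L = (1/N) * sum_k (y_k - t_k)^2 w.r.t. y.
template <std::size_t N>
void mse_grad(const std::array<float, N>& y, const std::array<float, N>& t,
              std::array<float, N>& dy) {
    for (std::size_t k = 0; k < N; ++k)
        dy[k] = 2.0f * (y[k] - t[k]) / static_cast<float>(N);
}

// Stochastic gradient descent step: p <- p - lr * g.
template <std::size_t N>
void sgd_update(std::array<float, N>& p, const std::array<float, N>& g, float lr) {
    for (std::size_t k = 0; k < N; ++k) p[k] -= lr * g[k];
}
```

One training step for this layer is then `forward`, `mse_grad`, `backward`, and `sgd_update` applied to both the weights and the biases.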

Since floating-point arithmetic may be desirable for neural network training, while quantized neural networks are the state of the art for inference, STANN supports both. Where possible, the data type can be changed simply via a template parameter. Where the implementation of a function itself depends on the data type used for calculations, the different implementations are placed in separate namespaces. This makes switching between data types simple and allows mixing data types if desired.
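The sketch below illustrates both mechanisms under simple assumptions. `relu` works for any arithmetic type through a template parameter, whereas the fully connected layer has separate `dense::fp` and `dense::int8` implementations because quantized arithmetic differs (int8 operands, a 32-bit accumulator, rescaling, and saturation). The namespace names, the power-of-two rescaling via the hypothetical `SHIFT` parameter, and the `dequantize` helper used to feed an int8 layer's output into a float layer are all illustrative and not taken from STANN.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>

// Type-generic: switching data types only requires a different T.
template <typename T, std::size_t N>
void relu(std::array<T, N>& v) {
    for (auto& e : v) e = std::max(e, T(0));
}

namespace dense {
namespace fp {  // floating-point implementation
template <std::size_t IN, std::size_t OUT>
void forward(const std::array<float, IN>& x, const std::array<float, OUT * IN>& w,
             const std::array<float, OUT>& b, std::array<float, OUT>& y) {
    for (std::size_t o = 0; o < OUT; ++o) {
        float acc = b[o];
        for (std::size_t i = 0; i < IN; ++i) acc += w[o * IN + i] * x[i];
        y[o] = acc;
    }
}
}  // namespace fp

namespace int8 {  // quantized implementation with different arithmetic
// Assumed scheme: int8 inputs/weights, int32 bias and accumulator, rescaling
// by 2^SHIFT (truncating division), then saturation to the int8 range.
template <std::size_t IN, std::size_t OUT, int SHIFT>
void forward(const std::array<std::int8_t, IN>& x,
             const std::array<std::int8_t, OUT * IN>& w,
             const std::array<std::int32_t, OUT>& b,
             std::array<std::int8_t, OUT>& y) {
    static_assert(SHIFT >= 0 && SHIFT < 31, "invalid shift");
    for (std::size_t o = 0; o < OUT; ++o) {
        std::int32_t acc = b[o];
        for (std::size_t i = 0; i < IN; ++i)
            acc += std::int32_t{w[o * IN + i]} * std::int32_t{x[i]};
        acc /= (std::int32_t{1} << SHIFT);
        acc = std::min<std::int32_t>(127, std::max<std::int32_t>(-128, acc));
        y[o] = static_cast<std::int8_t>(acc);
    }
}
}  // namespace int8
}  // namespace dense

// Mixing data types: convert int8 activations (real value = scale * q) to float.
template <std::size_t N>
std::array<float, N> dequantize(const std::array<std::int8_t, N>& q, float scale) {
    std::array<float, N> r{};
    for (std::size_t k = 0; k < N; ++k) r[k] = scale * static_cast<float>(q[k]);
    return r;
}
```

Because the data-type-specific code lives in its own namespace, moving a layer from float to int8 means changing `dense::fp` to `dense::int8` (plus the operand types), while type-generic functions such as `relu` are reused unchanged.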

Further details and use case examples can be found in the papers listed below.

Source code is available at:

https://github.com/ce-uos/STANN

Selected Publications

- Li, Y.; Rothmann, M.; Porrmann, M.:
  Resource-Efficient Implementation of Convolutional Neural Networks on FPGAs with STANN.
  In: 18th International Work-Conference on Artificial Neural Networks, IWANN 2025, A Coruña, Spain, June 16-18, 2025, pp. 179-191.
  DOI: https://doi.org/10.1007/978-3-032-02725-2_14

- Li, Y.; Mao, Y.; Weiss, R.; Rothmann, M.; Porrmann, M.:
  High-Performance FPGA-Based CNN Acceleration for Real-Time DC Arc Fault Detection.
  In: 18th International Work-Conference on Artificial Neural Networks, IWANN 2025, A Coruña, Spain, June 16-18, 2025, pp. 192-204.
  DOI: https://doi.org/10.1007/978-3-032-02725-2_15

- Rothmann, M.; Porrmann, M.:
  FPGA-based Acceleration of Deep Q-Networks with STANN-RL.
  In: 9th IEEE International Conference on Fog and Mobile Edge Computing, FMEC 2024, Malmö, Sweden, September 2-5, 2024, pp. 99-106.
  DOI: https://doi.org/10.1109/FMEC62297.2024.10710277

- Mao, Y.; Weiss, R.; Zhang, Y.; Li, Y.; Rothmann, M.; Porrmann, M.:
  FPGA Acceleration of DL-Based Real-Time DC Series Arc Fault Detection.
  In: 31st Reconfigurable Architectures Workshop, RAW 2024, San Francisco, CA, USA, May 27-28, 2024, pp. 92-98.
  DOI: https://doi.org/10.1109/IPDPSW63119.2024.00031

- Rothmann, M.; Porrmann, M.:
  STANN – Synthesis Templates for Artificial Neural Network Inference and Training.
  In: 17th International Work-Conference on Artificial Neural Networks, IWANN 2023, Ponta Delgada, Azores, Portugal, June 19-21, 2023, pp. 394-405.
  DOI: https://doi.org/10.1007/978-3-031-43085-5_31

- Rothmann, M.; Porrmann, M.:
  A Survey of Domain-Specific Architectures for Reinforcement Learning.
  In: IEEE Access, vol. 10, pp. 13753-13767, 2022.
  DOI: https://doi.org/10.1109/ACCESS.2022.3146518