Background to the study: When we look at the world around us, our brain not only recognizes objects such as "dog" or "car" but also grasps higher-level spatial and semantic relationships: what is happening, where it is happening, and how everything fits together. This information is essential for our understanding of human vision, but until now scientists have lacked the tools to analyze these complex processes.

"Using language models to understand visual processing sounds nonsensical at first," explains Prof. Dr. Tim C. Kietzmann from Osnabrück University and co-first author of the study. "However, language models are extremely good at processing contextual information and at the same time have a semantically rich understanding of objects and actions. These are important ingredients that the visual system could also extract when confronted with natural scenes."

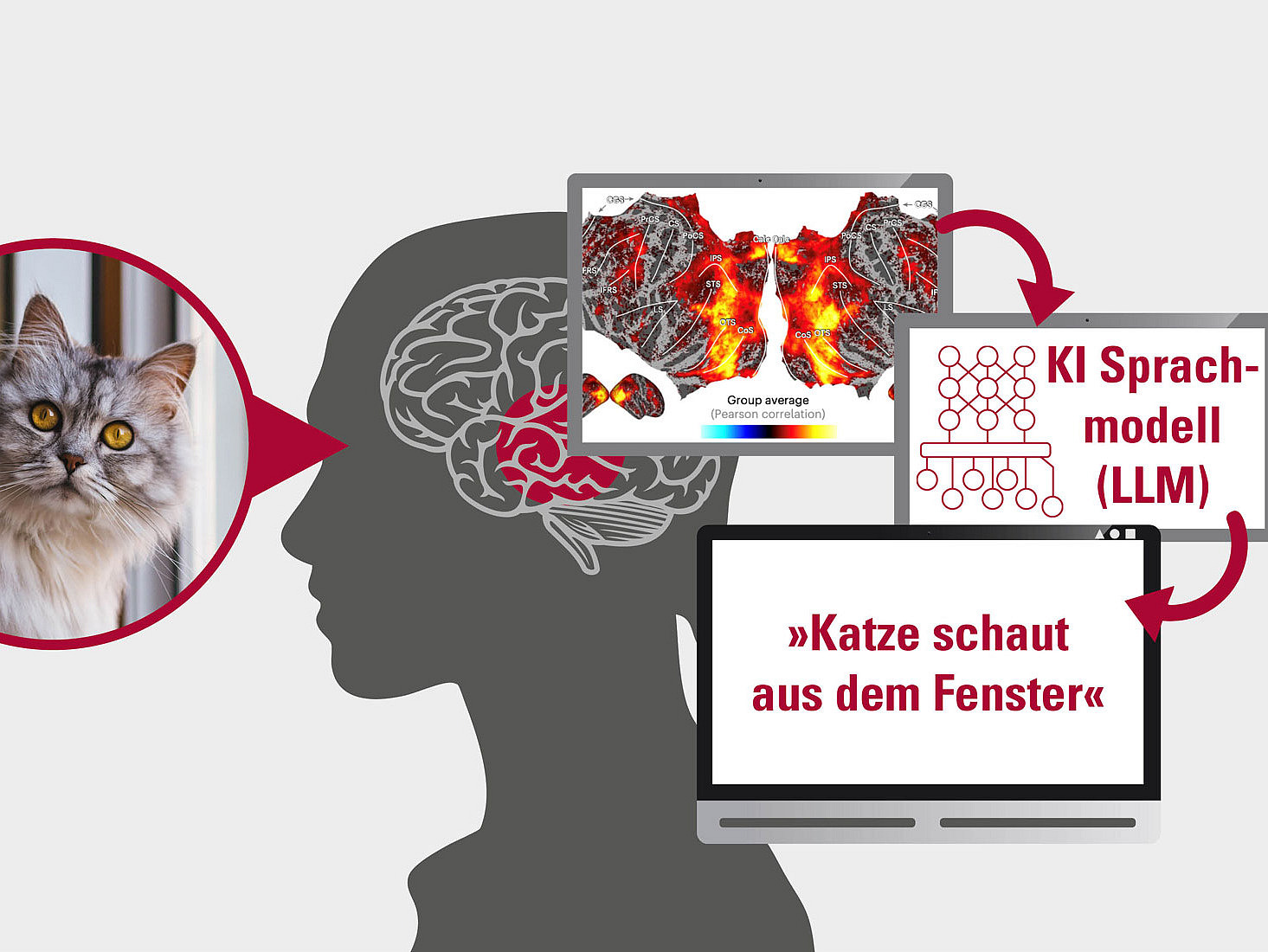

And indeed, linguistic scene descriptions, as represented in large language models, show striking similarities to brain activity in the visual system recorded while subjects view the corresponding images in an MRI scanner. So could it be that the task of the human brain's visual system is to process visual impressions in a way that is compatible with language? "It is conceivable that the brain tries to find a uniform language, a lingua franca, across different senses and languages. This would greatly simplify the exchange between brain areas," says Prof. Dr. Adrien Doerig, who is now a researcher at Freie Universität Berlin.

The researchers went one step further: they trained artificial neural networks that can predict accurate language model representations from images in a multi-stage process. These models, which process visual information in such a way that it can be decoded linguistically, capture the brain activity of the test subjects better than many of the currently leading AI models in the field.

The surprising correspondence between representations in AI language models and activation patterns in the brain is important for our understanding of complex semantic processing in the brain, and it also points to possible ways of improving AI systems in the future. Medical applications are conceivable as well. The research team succeeded in using AI to generate accurate descriptions of the images that the test subjects were viewing in the brain scanner. This form of "mind reading" points to possible improvements for brain-computer interfaces. Conversely, the technology could one day contribute to the development of visual prostheses for people with severe visual impairments.

About the paper: Adrien Doerig et al., "High-level visual representations in the human brain are aligned with large language models," Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01072-0. https://www.nature.com/articles/s42256-025-01072-0

Further information for editors:

Prof. Dr. Tim C. Kietzmann, Osnabrück University

Institute of Cognitive Science

tim.kietzmann@uni-osnabrueck.de